Introduction to Neural Image Captioning

Image Captioning is a damn hard problem — one of those frontier-AI problems that defy what we think computers can really do.

This summer, I had an opportunity to work on this problem for the Advanced Development team during my internship at indico. The work I did was fascinating but not revolutionary as I was attempting to understand and replicate the work of researchers who had published recent success stories of hybrid neural networks explaining photos.

Enjoy text that was created by my generative caption model. Its implementation was inspired by Google’s SHOW AND TELL: A NEURAL IMAGE CAPTION GENERATOR, an example of a hybrid neural network.

The project extended over several weeks, which included precursory learning on how to implement common neural network architectures using Theano (a symbolic-math framework in the Python programming language) and finally reading papers and creating models directly related to the image caption task.

A post about process

While this post is not meant to be a tutorial, I am going to explain my process of dividing the problem into several mini-projects of increasing difficulty, each bringing me closer to the final goal: a model that generates a description of a scene in a natural photo. In this post, we’ll discuss how to rate the relevance of an image and a caption to one another. I’ll be providing my code with examples for you to follow along. If you are curious about how to go from reading a research paper to replicating its work, this post is for you.

A two-part post about gratitude

More importantly, I want to bring to light the fact that I stood on the shoulders of giants to be able to accomplish this feat. There are incredibly smart people in the world and some of them happen to also be very generous in making their ideas, tools, models, and code accessible. I, for one, am so grateful that communities of people working on these amazing projects:

- share findings in research papers, detailed enough for an undergraduate intern like me to understand and hope to replicate!

- maintain open source tools like Theano to easily construct high-performing models

- provide pre-trained models via download or API as building blocks for bigger systems

- release their research source code for anyone to freely reuse in their own projects or experiments

The things brilliant people are willing to share are invaluable to the current generation of people pushing the boundaries in fields like machine learning.

Project 1. Rating how relevant an image and caption are to each other

Scoping what parts to tackle first was important in my journey to automatically caption images.

At the time, I reasoned that generating sentences was a difficult task for my skill-set. I postponed generating any form of language for Projects 2 and 3, which you can read about in Part 2 of this post. Thus, I decided to approach a slightly different problem with the same image caption dataset: ranking the most relevant captions for a photo.

One version of the task goes like this: You have one photo and a list of caption candidates. You’re going to sort the text captions by how relevant they are to the image.

The other version of the task is finding the most relevant image from a list of image candidates. Both framings of the task are supported by the end deliverable of project 1: a joint image-text embedder.

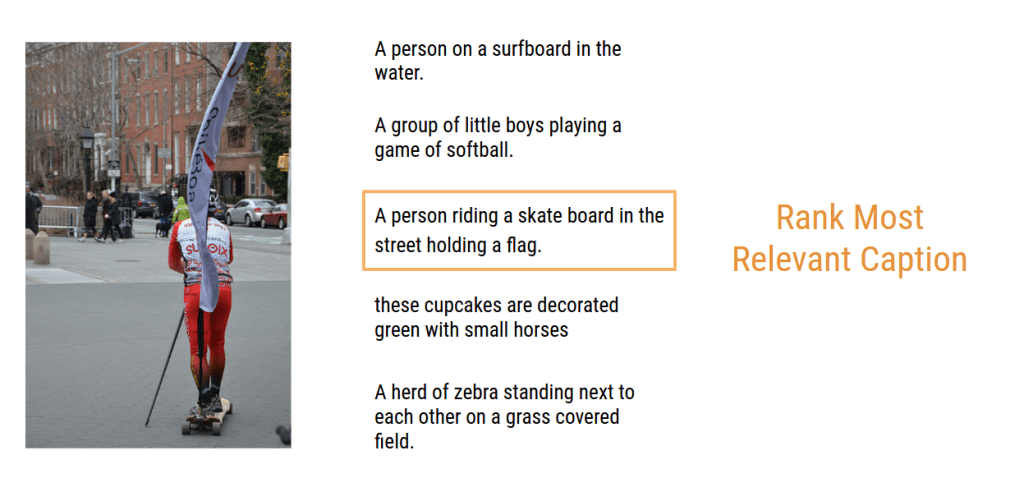

An example of selecting the most relevant caption from a list given an image

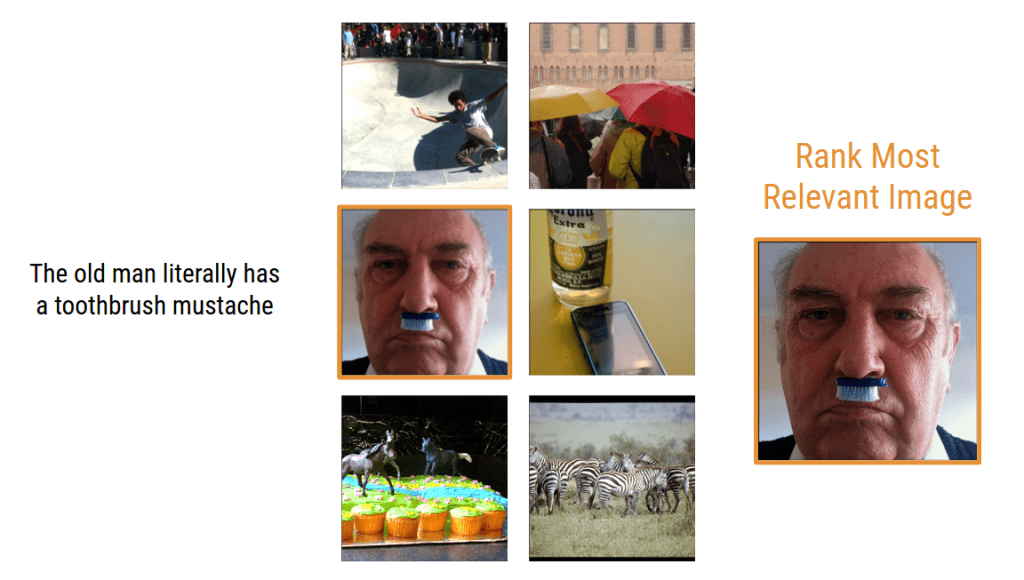

An example of selecting the most relevant image from a list given a caption

How it works: Training an encoder using related and contrastive visual-semantic pairs

You start with a large dataset of images with their accompanying captions. Many of the best datasets for this task have been collected by asking roughly 5 labelers to describe an image, giving a healthy amount of diversity to the captions. I used the most recently released dataset for this challenge, the Microsoft Common Objects in Context (MSCOCO). It contains more than 80,000 images, each with at least 5 associated captions. MSCOCO provided more than enough data fuel to train a supervised learning algorithm to relate captions with images.

The model I chose to implement came from the first half of a paper called “Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models.” I’ll do my best to describe the gist of how the encoder model works.

The purpose of this model is to encode the visual information from an image and the semantic information from a caption into an embedding space; this embedding space has the property that vectors that are close to each other are visually or semantically related.

Learning a model like this would be incredible. It would be a great way to relate how relevant an image and caption are to each other. For a batch of images and captions, we can use the model to map them all into this embedding space, compute a distance metric, and for each image and for each caption find its nearest neighbors. If you rank the neighbors by which examples are closest, you have ranked how relevant images and captions are to each other.
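To make the ranking step concrete, here is a minimal NumPy sketch. It assumes the embeddings already exist (the toy 3-D vectors below are made up for illustration, not produced by the trained model) and uses cosine similarity as the closeness measure; the function names are mine, not from the paper.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    """Scale each row to unit length so dot products equal cosine similarity."""
    return x / (np.linalg.norm(x, axis=1, keepdims=True) + eps)

def rank_captions(image_vec, caption_vecs):
    """Return caption indices sorted from most to least relevant to the image."""
    image_vec = l2_normalize(image_vec[None, :])[0]
    caption_vecs = l2_normalize(caption_vecs)
    similarities = caption_vecs @ image_vec   # one cosine score per caption
    order = np.argsort(-similarities)         # highest similarity first
    return order, similarities[order]

# Toy embeddings, invented for this example only.
image = np.array([1.0, 0.0, 0.0])
captions = np.array([
    [0.0, 1.0, 0.0],   # unrelated caption
    [0.9, 0.1, 0.0],   # closely related caption
    [0.5, 0.5, 0.0],   # somewhat related caption
])

order, scores = rank_captions(image, captions)
print(order)
```

Swapping the roles (one caption vector against a matrix of image vectors) gives the image-retrieval version of the task with the same function.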

Okay, now imagine that two black boxes existed which had a whole bunch of knobs on them. They represent the image encoder and caption encoder. How do we tune the knobs so that a related image and caption pair will be close in the embedding space, while the encoded vectors for an unrelated image and caption will have a large distance between them?

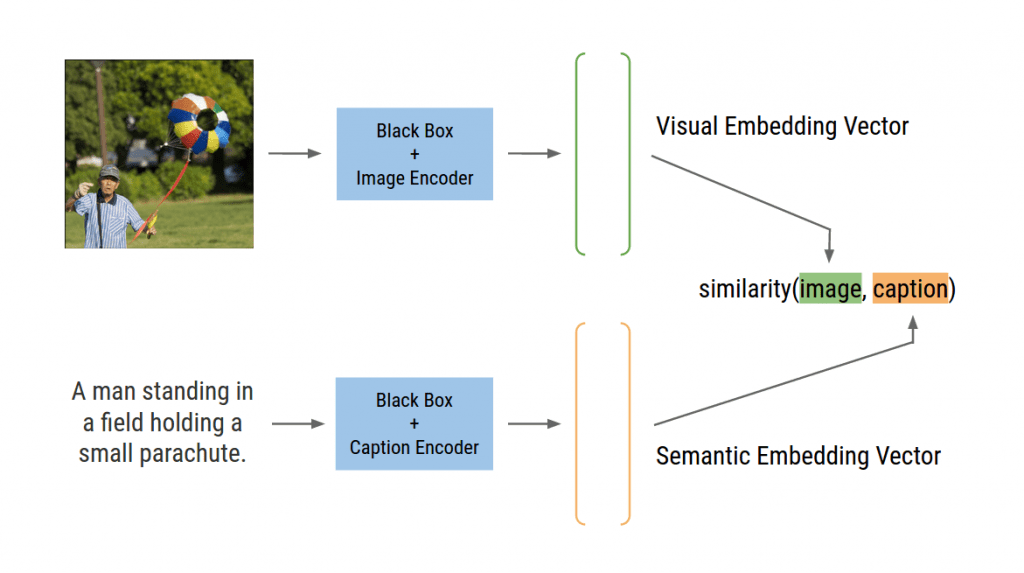

A system diagram of the image encoder and caption encoder working to map the data in a visual-semantic embedding space

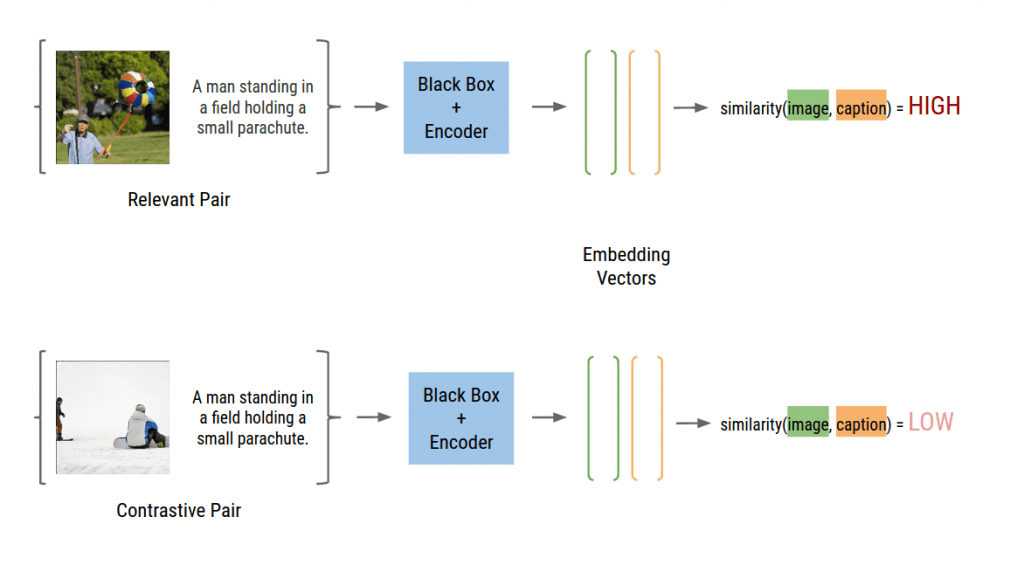

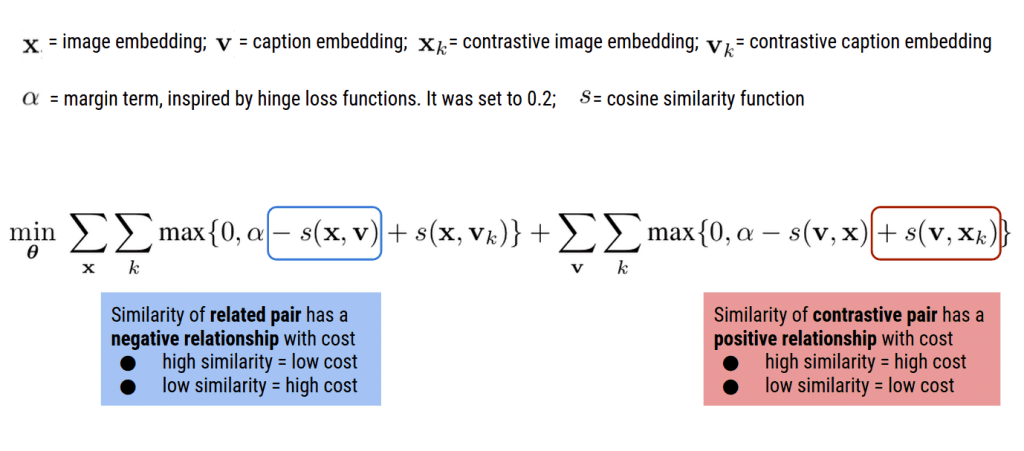

The answer is that we write a cost function that respects this property for both related and contrastive visual-semantic pairs. If the similarity between a related pair is high, the cost should be small; if it is low, the cost should be large. For a contrastive pair it is the opposite: low similarity should give a small cost, and high similarity a large one.

The pairwise ranking cost function in the form of a diagram

Then, compute the entire cost for a batch in the training dataset and use backpropagation and a gradient-descent-based algorithm to tune the knobs to make this cost low. This is the essence of learning to minimize a pairwise-ranking cost.

The pairwise ranking cost function in its mathematical form. For more information about the loss, see the DEVISE PAPER: DEEP VISUAL SEMANTIC EMBEDDINGS which uses this combination of cosine similarity and a hinge loss cost.
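For readers who prefer code to diagrams, here is a rough NumPy sketch of a pairwise ranking cost of this kind. It is my own simplified take, not the code from the paper: it assumes row i of each matrix is a matching image-caption pair, treats every other row in the batch as a contrastive example, and uses a margin of 0.2, which is an arbitrary value picked for the example.

```python
import numpy as np

def pairwise_ranking_loss(image_emb, caption_emb, margin=0.2):
    """Hinge-style ranking cost; row i of each matrix is assumed to be a matching pair."""
    # Normalize rows so that dot products are cosine similarities.
    im = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    cap = caption_emb / np.linalg.norm(caption_emb, axis=1, keepdims=True)

    scores = im @ cap.T            # scores[i, j] = cos(image i, caption j)
    positive = np.diag(scores)     # similarity of each related pair

    # Image as anchor: every other caption in the batch is contrastive.
    cost_captions = np.maximum(0.0, margin - positive[:, None] + scores)
    # Caption as anchor: every other image in the batch is contrastive.
    cost_images = np.maximum(0.0, margin - positive[None, :] + scores)

    # Related pairs should not be penalized against themselves.
    np.fill_diagonal(cost_captions, 0.0)
    np.fill_diagonal(cost_images, 0.0)
    return cost_captions.sum() + cost_images.sum()

aligned = np.eye(3)                # toy embeddings where every pair matches perfectly
shuffled = np.eye(3)[[1, 2, 0]]    # same captions paired with the wrong images

loss_aligned = pairwise_ranking_loss(aligned, aligned)
loss_shuffled = pairwise_ranking_loss(aligned, shuffled)
print(loss_aligned, loss_shuffled)
```

The cost is zero once each related pair beats every contrastive pair by at least the margin, which is why the perfectly aligned batch costs nothing and the shuffled one does not.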

How it works: Encoding Images into an Embedding Space

My colleague, Luke Metz, wrote a little bit about the problem of converting images into a meaningful embedding space. Here’s an excerpt from his Visualizing with t-SNE blog post describing content-preserving feature extraction of images:

Converting images into some representation that preserves the content of the image is a hard problem. These features strive to be invariant (they do not change) under certain constraints. For example, is a cat still a cat depending on the quality of a photo? Does the location of the cat in the image matter? A zoomed in version of a cat is still a cat. The raw pixel values may change drastically, but the subject stays the same.

There are a number of techniques used to make sense of images, such as SIFT or HOG, but computer vision research has been moving more towards using convolutional neural networks to create these features. The general idea is to train a very large and very deep neural network on an image classification task to differentiate between many different classes of images. In the processes of learning to classify, the model learns useful feature extractors that can then be used for other tasks.

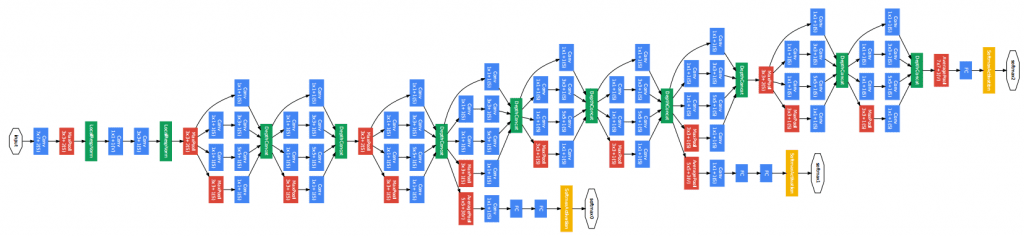

GoogLeNet in all its glory. While the convolutional feature extractor I used was not based on GoogLeNet, it has such a pretty visualization of what a deep neural network looks like that I couldn’t help but include it. Any time you see the GoogLeNet architecture diagram, just substitute in “Arbitrary Awe-inspiring Convolutional Neural Network”

The embeddings learned using a convolutional neural network have properties analogous to the visual-semantic embeddings the image-caption encoder wants to learn: images close in the space look visually similar.

Unfortunately, building useful embeddings using convolutional neural networks from the ground-up can be intractable. Luke details this dilemma:

Sadly, these techniques require large amounts of data, computation, and infrastructure to use. Models can take weeks to train and need expensive graphics cards and millions of labeled images. Although it’s highly rewarding when you get them to work, sometimes creating these models is just too time intensive.

The good news is that some people in the machine learning community have made these pre-trained models available, so others won’t have to deal with the problems of not having enough data, computation, or infrastructure. Many are built using stacks of convolutional layers trained on the large classification task called ImageNet Large Scale Visual Recognition Challenge (ILSVRC) which contains 1.2 million photos and 1000 labeled classes. indico has a very intuitive image features API which I ended up using to compute convolutional embeddings.

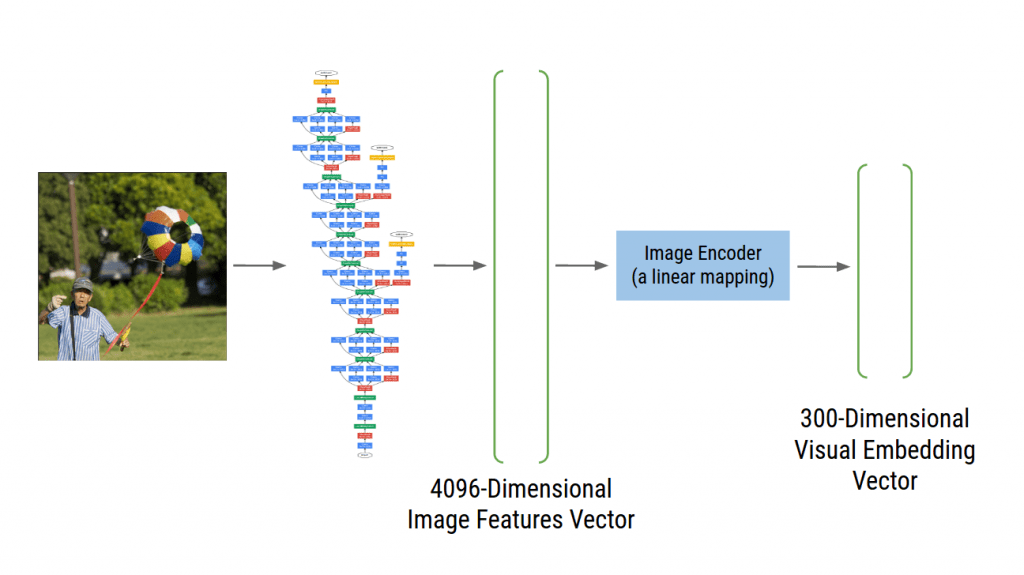

The end result is a 4096-dimensional feature vector for any image that preserves its visual structure and meaning. I’m going to call the model that turns image-pixel-grids into the 4096-dimensional feature vector the “Convolutional Image Features Extractor”.

The image encoder for the image captioning model linearly maps the convolutional features from a 4096-D to a 300-D space. The 300-D space will be the visual embedding space used to match images with relevant captions.

Pipeline diagram from taking an image pixel-grid and transforming it into the visual embedding space used in the visual-semantic embedding model
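The linear image encoder is small enough to sketch in a few lines of NumPy. The weights below are randomly initialized placeholders (in the real model they are learned during training), and the input features are random stand-ins for the output of the Convolutional Image Features Extractor.

```python
import numpy as np

rng = np.random.default_rng(0)

FEATURE_DIM = 4096     # size of the convolutional feature vector
EMBEDDING_DIM = 300    # size of the joint visual-semantic space

# Placeholder parameters; training would tune these "knobs".
W = rng.normal(scale=0.01, size=(FEATURE_DIM, EMBEDDING_DIM))
b = np.zeros(EMBEDDING_DIM)

def encode_images(features):
    """Linearly map a (batch, 4096) feature matrix to (batch, 300) embeddings."""
    return features @ W + b

# Random stand-in for real convolutional features of two images.
features = rng.normal(size=(2, FEATURE_DIM))
embeddings = encode_images(features)
print(embeddings.shape)  # (2, 300)
```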

How it works: Encoding Sentence Captions into an Embedding Space

While the goal of processing captions is similar to the image encoder — turn a sentence into a 300-dimensional semantic vector — the model to accomplish this task is fundamentally different.

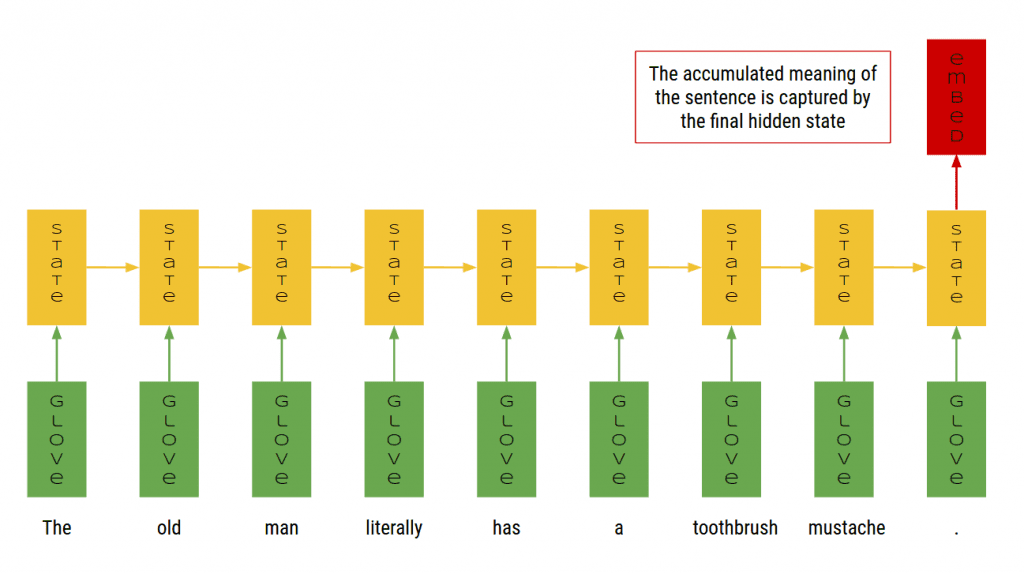

In the same University of Toronto paper, “Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models”, the captions are modeled by a recurrent neural network.

I won’t go into too much detail about recurrent neural networks, as there are already great resources on the topic and they understand and explain the inner workings much better than I can! My favorites are “General Sequence Learning using Recurrent Neural Networks” by Alec Radford and “The Unreasonable Effectiveness of Recurrent Neural Networks” by Andrej Karpathy.

The important part is that recurrent nets can take sequences as input and can store some type of “state”; think of this “state” as its knowledge of the earlier parts of the sequence it has seen. This formulation fits sequences like natural language very well, since words earlier in a sentence usually carry context that informs the meaning of the current word and of the words that follow. Recurrent neural networks have had many successes processing written language, and this gives us some intuition about why that is the case.

The second technical detail pertains to what we actually feed in as input to the recurrent neural network. The words in the sentence need to be encoded into some mathematical representation that the recurrent net can perform computations with. Word embeddings are another term for these vector representations. We could learn these mappings from words to word vectors during the whole image-captioning training process, but we don’t have to (neither did the paper). Using a pre-trained model of word-embeddings is a good alternative. There are two commonly used pre-trained word-embedding models: Google’s Word2Vec and Stanford’s GloVe: Global Vectors for Word Representation. I chose to use the GloVe vectors, for no strong reason.

The word embeddings space is pretty cool. Not only do synonymous words appear close in this embedding space, but some linear substructures exist too. What this means is that the addition and subtraction between two vectors can hold meaning. For example, if you did the computation:

king - man + woman

in the word-embedding space, you would find that this vector is close to the vector representation for queen!
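Here is a tiny sketch of how such an analogy lookup can be computed. The 2-D vectors are hand-made toys (one axis loosely standing for "royalty", the other for "gender"), not real GloVe vectors, so this only illustrates the arithmetic and the nearest-neighbor search.

```python
import numpy as np

# Hand-made toy vectors, NOT real GloVe embeddings.
vectors = {
    "king":  np.array([1.0,  1.0]),
    "queen": np.array([1.0, -1.0]),
    "man":   np.array([0.0,  1.0]),
    "woman": np.array([0.0, -1.0]),
    "apple": np.array([-1.0, 0.0]),
}

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the query words."""
    target = vectors[a] - vectors[b] + vectors[c]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

result = analogy("king", "man", "woman", vectors)
print(result)  # queen
```

With real pre-trained vectors the same function would run over the whole vocabulary, and the answer would only be approximately, not exactly, equal to the target vector.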

These types of examples are supposed to highlight the impressive structure GloVe word embeddings uphold. This type of linear substructure does not necessarily hold for all types of word embeddings, nor does it have to in order for them to be effective.

The point is that dense continuous-valued word embeddings are a great building block if we want to learn embeddings that capture the semantics of entire sentences.

Note that a model like GloVe was trained on 6 billion word tokens, on a corpus that included the Wikipedia and Gigaword 5th edition datasets. Training word-embedding models like GloVe from scratch would have taken weeks, an enormous amount of time and effort which would have brought Project 1 to a standstill. So thank you Stanford NLP group for offering your pre-trained GloVe vectors for the use of developers like myself!

For the image-caption relevancy task, recurrent neural networks help accumulate the semantics of a sentence. Strings of sentences are parsed into words, each of which has a GloVe vector representation that can be found in a lookup table. These word vectors are fed into a recurrent neural network sequentially, which captures the notion of semantic meaning over the entire sequence of words via its hidden state. We treat the hidden state after the recurrent net has seen the last word in the sentence as the sentence embedding.

Pipeline diagram from taking a caption string and transforming it into the semantic embedding space used in the image-caption embedding model
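The caption pipeline can be sketched as follows. To keep it short I swapped in a vanilla tanh recurrent unit instead of the LSTM I actually used, shrank the dimensions from 300 to 4, and filled the lookup table and weights with random placeholders, so treat this as an illustration of the data flow only.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 4  # 300 in the real model; kept tiny here for readability

# Placeholder lookup table standing in for pre-trained GloVe vectors.
vocab = ["a", "plane", "in", "the", "sky"]
lookup = {word: rng.normal(size=DIM) for word in vocab}

# Placeholder recurrent weights; training would learn these.
W_in = rng.normal(scale=0.1, size=(DIM, DIM))
W_rec = rng.normal(scale=0.1, size=(DIM, DIM))
bias = np.zeros(DIM)

def encode_caption(caption):
    """Feed word vectors through a vanilla RNN and return the last hidden state."""
    hidden = np.zeros(DIM)
    for word in caption.lower().split():
        x = lookup[word]                                   # word -> vector lookup
        hidden = np.tanh(x @ W_in + hidden @ W_rec + bias)  # update the state
    return hidden                                          # the sentence embedding

sentence_embedding = encode_caption("a plane in the sky")
print(sentence_embedding.shape)  # (4,)
```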

The GloVe word embedding vectors used were 300-dimensional, as was the hidden state of the recurrent neural network. The output sentence embedding vector is in the same 300-dimensional space as the image embedding vector, which enabled the computation of their cosine similarities. Finally, I used a variant of the typical recurrent unit, called a Long Short-Term Memory (LSTM) unit. RNN variants such as LSTMs or Gated Recurrent Units (GRUs) have desirable properties which help them learn faster and more consistently than a vanilla recurrent neural network. You can learn more about these variants via Alec Radford’s talk, “General Sequence Learning using Recurrent Neural Networks”, or reference many of the articles in Jiwon Kim’s “Awesome Recurrent Neural Networks” resource page.

Implementation: Not too difficult in retrospect!

I wouldn’t say implementing Project 1 was a piece of cake from here, but it was a ton more doable. The final tasks were to implement:

- an image encoder — a linear transformation from the 4096 dimensional image feature vector to a 300 dimensional embedding space

- a caption encoder — a recurrent neural network which takes word vectors as input at each time step, accumulates their collective meaning, and outputs a single semantic embedding by the end of the sentence.

- a cost function which involved computing a similarity metric, which happens to be cosine similarity, between image and caption embeddings

These tasks were accomplished without much headache, for the following reasons:

- a linear transformation is effectively a weight matrix multiply, which is one of the simplest operations one can do in any machine learning model implementation. [This is what a linear transformation looks like in Blocks].

- I had code for the recurrent neural network in Blocks/Theano, which I had used in the task of sentiment analysis. This problem is closely related to the caption encoder, as both take sequence input (words of a sentence or paragraph) and return a single output (sentence embedding vector or sentiment score).

- The cost function included computing norms of vectors, sums, subtractions, and multiplies — all very common array operations in scientific python. It ended up being even easier than I expected when I stumbled upon a code example by Ryan Kiros, one of the researchers who published the paper, with the same pair-wise similarity cost function written in Python/NumPy.

Source Code!

I hope I’ve intrigued you enough to be hungry for more details! Please take the learnings I’ve presented here to create something awesome of your own.

- Python Code to my ranking encoder implementation. Everything is written in Blocks/Fuel, a framework that helps you build and manage neural network models using Theano.

- Demo! IPython Notebook that demonstrates Phrase-based Image Search, an excellent application of the image caption embedding models. I inputted the example phrase “in the sky” and the images it returns are of airplanes, kites, birds, etc. flying in the sky!

Here’s a sneak peek of the 50 lines (with white spaces!) that define the top-level encoder brick written in Blocks. It makes me so happy to see it be so simple.

    from blocks.bricks import Initializable, Linear
    from blocks.initialization import IsotropicGaussian, Constant
    from blocks.bricks.recurrent import LSTM
    from blocks.bricks.base import application


    class Encoder(Initializable):

        def __init__(self, image_feature_dim, embedding_dim, **kwargs):
            super(Encoder, self).__init__(**kwargs)

            # linear map from convolutional image features to the joint space
            self.image_embedding = Linear(
                input_dim=image_feature_dim,
                output_dim=embedding_dim,
                name="image_embedding"
            )

            # times 4 because the LSTM expects the stacked pre-activations
            # for its input, forget, cell, and output gates
            self.to_inputs = Linear(
                input_dim=embedding_dim,
                output_dim=embedding_dim * 4,
                name="to_inputs"
            )

            self.transition = LSTM(dim=embedding_dim, name="transition")

            self.children = [
                self.image_embedding,
                self.to_inputs,
                self.transition
            ]

        @application(
            inputs=['image_vects', 'word_vects'],
            outputs=['image_embedding', 'sentence_embedding']
        )
        def apply(self, image_vects, word_vects):
            image_embedding = self.image_embedding.apply(image_vects)

            inputs = self.to_inputs.apply(word_vects)

            # shuffle dimensions to correspond to (sequence, batch, features)
            inputs = inputs.dimshuffle(1, 0, 2)

            hidden, cells = self.transition.apply(inputs=inputs, mask=None)

            # last hidden state represents the accumulation of word embeddings
            # (i.e. the sentence embedding)
            sentence_embedding = hidden[-1]

            return image_embedding, sentence_embedding