“Cutting edge.” It’s usually one of those overused phrases scattered all over company websites to add some marketing pizazz.

Everything you see here is on the cutting edge.

Yet, it’s perfect for describing Sentiment HQ, one of our newest machine learning models.

If you’re not too sure what “sentiment analysis” is, it basically involves using a computer algorithm to decide whether the opinion expressed in a piece of text is positive, negative, or neutral. It’s also called “opinion mining”.

So, how is Sentiment HQ on the cutting edge, and how does it compare to our original Sentiment Analysis model? Well, it all comes down to…

Speed vs. accuracy

When it comes to predictive modeling, there’s always a trade-off between efficiency and accuracy, and there are times when simpler models are more appropriate for given problems.

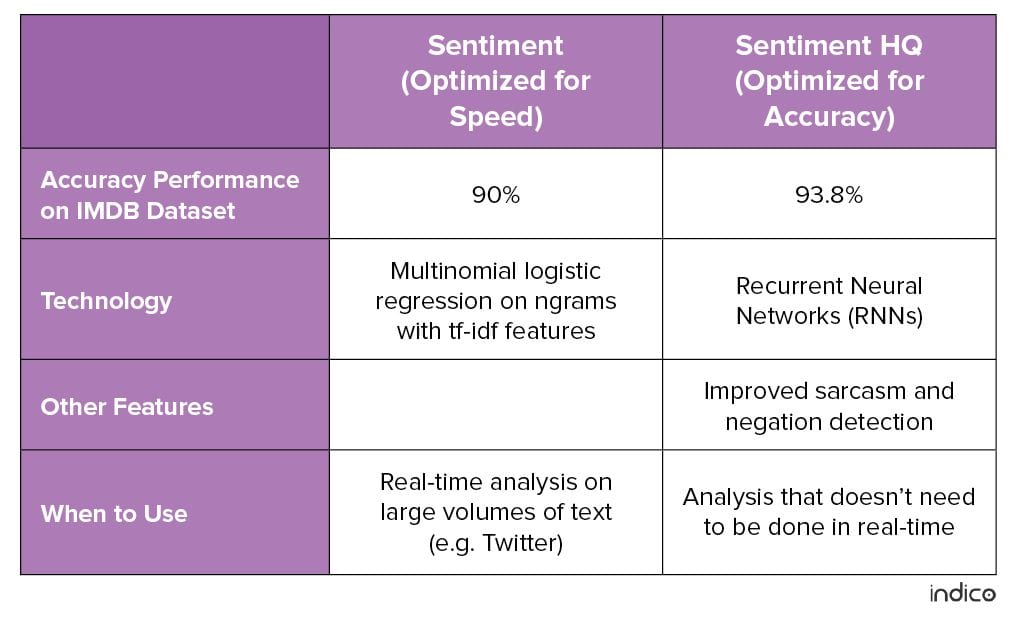

Let’s start with Sentiment HQ. Here’s why it’s special: Sentiment HQ set the new accuracy standard for sentiment analysis at 93.8 percent on the IMDB Movie Review academic dataset, and is better at detecting clear sarcasm and negation. Before this, the highest accuracy achieved for this task on the same dataset was 92.76 percent in November 2015 by Andrew M. Dai and Quoc V. Le.

What’s even more impressive is that Sentiment HQ wasn’t trained on the IMDB dataset (typically, models are trained and tested on the same dataset), which suggests that what it has learned about sentiment generalizes beyond its training data. Plus, unlike the model created by Dai and Le, Sentiment HQ is built so that people without machine learning expertise can use it. Awesome.

Because it’s a more complex model, Sentiment HQ processes results a little slower than our original Sentiment model, which performs at 90 percent accuracy. Sentiment HQ is still generally the way to go, unless you’re planning to do real-time analysis of more than 10 pieces of text. For instance, say you’re on the campaign team of one of the presidential candidates. You need to analyze how people on Twitter feel about your candidate’s responses during the debate so you can suggest a change in tactics mid-debate if necessary. In this case, speed is more important than accuracy, and the original Sentiment model would be the right choice.

What’s the tech?*

*We get a bit deep in the weeds with the technical stuff here, so if it’s not for you, just skip to the next section where we compare the performance of Sentiment HQ and the original Sentiment model.

Sentiment uses multinomial logistic regression on ngrams with tf-idf features. Let’s break that down in English:

- Multinomial logistic regression is a classification model that predicts the probability of each possible class (here, each sentiment) for a given input.

- N-gram features keep track of the presence or absence of short text phrases.

- tf-idf, or “term frequency – inverse document frequency”, weighs how often a term appears in a document against how many documents in the collection contain it. Terms that show up almost everywhere (like “the”) get a low weight, while terms concentrated in a few documents (like “machine learning”) get a high one.
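
To make the n-gram and tf-idf ideas concrete, here’s a minimal sketch in plain Python. It is not indico’s implementation: the helper names `ngrams` and `tf_idf`, the whitespace tokenizer, the toy documents, and the logarithmic form of the inverse document frequency (one common variant among several) are all assumptions made for illustration.

```python
import math
from collections import Counter

def ngrams(text, n_max=2):
    """Return all word n-grams of length 1..n_max from a lowercased text."""
    words = text.lower().split()
    grams = []
    for n in range(1, n_max + 1):
        for i in range(len(words) - n + 1):
            grams.append(" ".join(words[i:i + n]))
    return grams

def tf_idf(documents, n_max=2):
    """Compute a tf-idf weight for every n-gram in every document."""
    doc_grams = [Counter(ngrams(doc, n_max)) for doc in documents]
    # Document frequency: in how many documents does each n-gram appear?
    doc_freq = Counter()
    for counts in doc_grams:
        doc_freq.update(counts.keys())
    n_docs = len(documents)
    weights = []
    for counts in doc_grams:
        total = sum(counts.values())
        weights.append({
            # term frequency * log-scaled inverse document frequency
            gram: (count / total) * math.log(n_docs / doc_freq[gram])
            for gram, count in counts.items()
        })
    return weights

# Toy documents, made up for this example.
docs = [
    "the movie was great",
    "the movie was boring",
    "the machine learning talk was great",
]
weights = tf_idf(docs)
print(weights[2]["the"])               # 0.0, since "the" is in every document
print(weights[2]["machine learning"])  # positive, since only one document has it
```

A term that appears in every document ends up with a weight of zero, which is exactly the “the” vs. “machine learning” contrast described above.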

So…what does that all amount to? Like I mentioned above, there’s always a trade-off between efficiency and accuracy. For this model, logistic regression is efficient and works well at scale. This is why this model is so good for quickly scanning through uncomplicated pieces of text, like tweets.
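
Part of why logistic regression is so efficient is that a prediction is just one weighted sum per class, followed by a softmax to turn the sums into probabilities. Here’s a rough sketch of that step. The weights, biases, and feature values are hypothetical numbers picked by hand, and `predict` is an illustrative helper, not part of the indico API.

```python
import math

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def predict(features, weights, biases):
    """Score each class as a weighted sum of features, then apply softmax."""
    scores = [
        bias + sum(class_weights.get(name, 0.0) * value
                   for name, value in features.items())
        for class_weights, bias in zip(weights, biases)
    ]
    return softmax(scores)

# Hypothetical, hand-picked weights for two classes: [negative, positive].
# A trained model would learn these from labeled examples.
weights = [
    {"boring": 2.0, "great": -1.5},
    {"boring": -2.0, "great": 1.5},
]
biases = [0.0, 0.0]

# Hypothetical feature values for one short text (e.g. tf-idf weights).
features = {"great": 0.4, "movie": 0.1}
negative, positive = predict(features, weights, biases)
print(round(negative, 3), round(positive, 3))
```

Features the model has no weight for (like “movie” here) simply contribute nothing to the score.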

Sentiment HQ uses Recurrent Neural Networks (RNNs). RNNs can work with sequences of vectors, and are therefore better able to learn and understand contextual information by themselves. The model reads through a sequence of text, and after each word, modifies its understanding of the document. It learns to preserve contextual information of text, whereas n-grams focus on frequency and largely ignore word order.

Let me elaborate.

Traditional machine learning models for text analysis use “bag of words” representations. (A representation is a method of translating non-numerical data, like text or images, into a form that can be processed by a machine learning model.) Using a bag of words representation means that you only know which words are present and not the order in which they appear, so it’s a bit like alphabet soup. You have a blend of things used to form sentences, but you can’t really reconstruct the original sentence because you’ve lost all the important information about what goes where.
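
Here’s a tiny illustration of the alphabet soup problem. The `bag_of_words` helper and the two example sentences are made up for this sketch; it just counts words after splitting on whitespace.

```python
from collections import Counter

def bag_of_words(text):
    """Count how often each word occurs, discarding word order."""
    return Counter(text.lower().split())

first = "the plot was good not bad"
second = "the plot was bad not good"

# The two sentences mean opposite things but contain the same words.
print(bag_of_words(first) == bag_of_words(second))  # True
```

Any model that only sees these counts has no way to tell the two sentences apart.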

RNNs, on the other hand, read text similarly to the way humans do, which makes them better at generalizing and at understanding relationships between words in sentences. That’s why Sentiment HQ is much better at recognizing clear sarcasm and negation.
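
To show what reading a sequence one word at a time looks like, here’s a bare-bones recurrent update in plain Python. This is a generic, textbook-style recurrence and not the Sentiment HQ architecture, whose details aren’t covered here. The word vectors and weight matrices are hypothetical, hand-picked, and untrained.

```python
import math

# Hypothetical 2-dimensional word vectors, chosen by hand for illustration.
EMBEDDINGS = {
    "not": [0.0, 1.0],
    "good": [1.0, 0.0],
    "bad": [-1.0, 0.0],
}

# Hypothetical, untrained weights. A real model learns these from data.
W_INPUT = [[0.8, -0.3], [0.2, 0.9]]
W_HIDDEN = [[0.5, -0.6], [0.4, 0.5]]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_step(hidden, word_vector):
    """One recurrent update: h_new = tanh(W_INPUT @ x + W_HIDDEN @ h)."""
    from_input = matvec(W_INPUT, word_vector)
    from_hidden = matvec(W_HIDDEN, hidden)
    return [math.tanh(a + b) for a, b in zip(from_input, from_hidden)]

def read(words):
    """Read words in order, updating the hidden state after each one."""
    hidden = [0.0, 0.0]
    for word in words:
        hidden = rnn_step(hidden, EMBEDDINGS[word])
    return hidden

# Same three words, different order.
state_a = read(["good", "not", "bad"])
state_b = read(["bad", "not", "good"])
print(state_a)
print(state_b)
```

Because each update depends on the previous hidden state, the same words in a different order leave the network in a different final state. That is the information a bag of words throws away.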

Let’s test.

Let’s see the two sentiment analysis models in action.

You’ll need your API key in order to run the following code — if you don’t have one, you can sign up for a free indico account. I’ll be presenting the code in Python. If you prefer to work with Ruby, Java, PHP, node.js, or R, visit our docs for more information.

Install indico

First, if you haven’t already installed the indicoio package from PyPI, you can easily do so using pip.

pip install indicoio

If you have any trouble installing the indicoio package, here are some solutions to common installation issues.

Running Sentiment

Run the following code in your Python interpreter to see how Sentiment performs.

import indicoio

indicoio.config.api_key = "YOUR_API_KEY"

# Our batch analysis function allows you to analyze multiple pieces of text at once

indicoio.sentiment([

"indico is so easy to use!",

"What a great week: my bike was stolen, I lost my keys, and now you've ruined my expensive painting.",

"I wasn't super impressed with that movie."

])

])

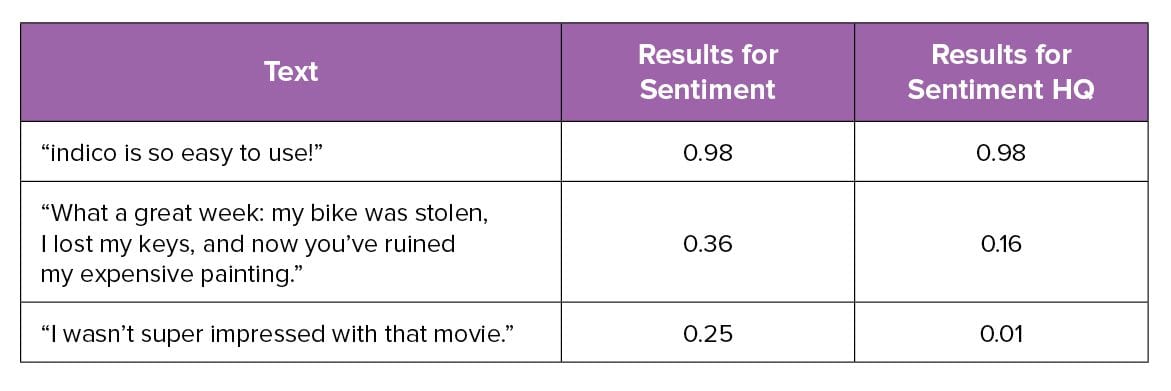

Your results should look like this:

[0.9782025594088044, 0.35564507928276173, 0.24836305578391962]

The Sentiment API returns a number between 0 and 1 for each piece of text. This number is the probability that the analyzed text is positive. Values greater than 0.5 indicate positive sentiment, while values less than 0.5 indicate negative sentiment.
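
If you’d rather work with labels than raw numbers, a small helper like the one below will do. The `label` function is just an illustration and not part of the indicoio package, and treating a score of exactly 0.5 as “undecided” is my own assumption.

```python
def label(score, threshold=0.5):
    """Map a sentiment score between 0 and 1 to a human-readable label."""
    if score > threshold:
        return "positive"
    if score < threshold:
        return "negative"
    return "undecided"  # assumption: exactly on the boundary

# The scores returned by the Sentiment example above.
scores = [0.9782025594088044, 0.35564507928276173, 0.24836305578391962]
labels = [label(score) for score in scores]
print(labels)  # ['positive', 'negative', 'negative']
```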

Running Sentiment HQ

Run the following code in your Python interpreter to see how Sentiment HQ performs.

#Skip these two lines if you ran the code above

import indicoio

indicoio.config.api_key = "YOUR_API_KEY"

# Our batch analysis function allows you to analyze multiple pieces of text at once

indicoio.sentiment_hq([

"indico is so easy to use!",

"My car broke down on the highway. What a fantastic day.",

"I wasn't super impressed with that movie."

])

])

Your results should look like this:

[0.9816458225250244, 0.1646454632282257, 0.014736365526914597]

Let’s compare the results.

As you can see, Sentiment HQ did a better job of picking up sarcasm and negation than the original Sentiment model. However, if you need to do real-time analysis, then the Sentiment model that’s optimized for speed may be the way to go.
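
One quick way to put numbers on that comparison is to look at how far each score sits from the 0.5 boundary. The sketch below uses only the two sentences that appear in both runs (the second sentence differs between them), with scores copied from the results above. The `margin` helper is a made-up name for this example.

```python
# Scores copied from the two runs above: (Sentiment, Sentiment HQ).
shared = {
    "indico is so easy to use!": (0.9782025594088044, 0.9816458225250244),
    "I wasn't super impressed with that movie.": (0.24836305578391962, 0.014736365526914597),
}

def margin(score):
    """Distance from the 0.5 decision boundary (0 = undecided, 0.5 = certain)."""
    return abs(score - 0.5)

for text, (fast, hq) in shared.items():
    print(text)
    print(f"  Sentiment:    {fast:.3f} (margin {margin(fast):.3f})")
    print(f"  Sentiment HQ: {hq:.3f} (margin {margin(hq):.3f})")
```

On the negated sentence, Sentiment HQ lands much further from the boundary than the original model does, while the two are nearly tied on the straightforward positive one.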

Go ahead and change those sentences to whatever you want, and run both models again!

To sum it up…

Here’s a handy chart comparing the differences between the two models.

Not sure whether you need to optimize for speed or accuracy? Email contact@indico.io and we can help you figure out whether Sentiment or Sentiment HQ is the best solution for you.
