Last month Alec Radford and I had the great pleasure of attending the SIGGRAPH 2015 conference in Los Angeles. If you don’t know about SIGGRAPH, here’s a quick snippet from their website: “Since its beginning in 1974 as a small group of specialists in a previously unknown discipline, ACM SIGGRAPH has evolved to become an international community of researchers, artists, developers, filmmakers, scientists, and business professionals who share an interest in computer graphics and interactive techniques.”

Overall, SIGGRAPH was an incredible experience, and I wanted to share a few of my favorite tidbits with you because the ideas coming out of the conference were all really cool.

One: The focus on work flow

One trend that came up over and over again, in many different contexts, was the focus on work flow and efficiency. Behind every blockbuster hit there exist teams of researchers and software engineers who enable artists to create as quickly and as flexibly as possible. This focus is something the machine learning community is missing. By saying this I don’t mean to downplay the amazing work done on existing libraries. You all are awesome and you are a major driving force pushing the field forward. In general, however, the tools for media-based pipelines are orders of magnitude more developed.

Much of the ML field is dominated by researchers wanting to create and publish the next great new thing, and this is necessary to advance the state of the art. That being said, the energy needed to create new tools or modify existing ones often far outweighs the time needed to implement an algorithm in an existing framework, so tooling tends to get less attention. When researchers do put work into tools, amazing things come out. Take a tool like Theano. I would argue that it is by far the most advanced machine learning tool available to us. It rethought how we write and think about programs, and by doing so enabled new work flows and empowered the research community to do crazy things such as sequence-to-sequence recurrent neural network models with attention. I believe that this is just the beginning of what can and will be done.

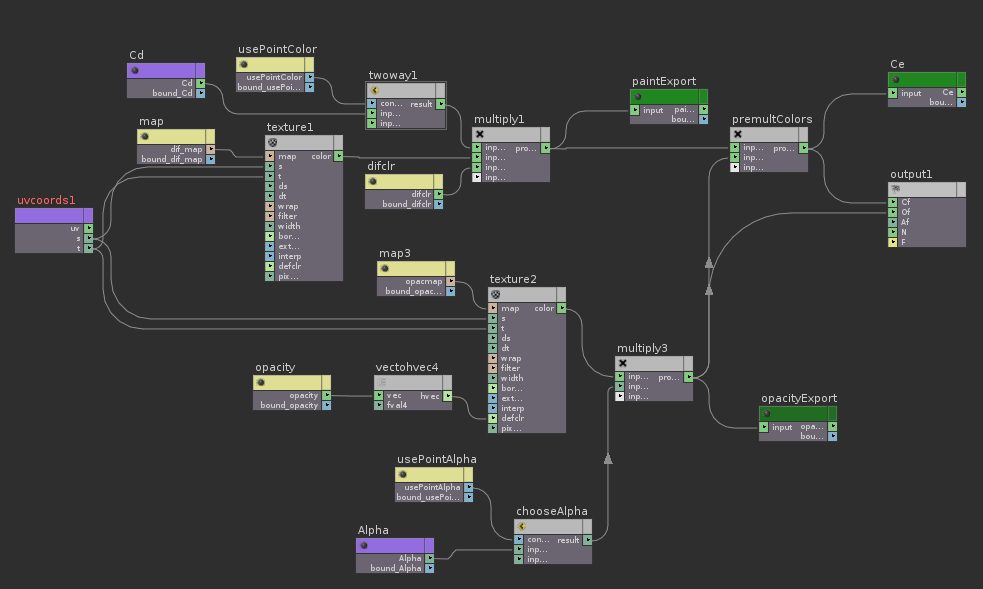

A product that caught my eye is Houdini, a special effects rendering and simulation package by Side Effects Software. Houdini, like most graphics programs, exposes a clean interface that lets artists create complex effects using a node-based programming language.

Many programmers, including me, are quick to scoff at visual programming, claiming that it cannot be more efficient or more powerful than plain text. Houdini challenges that. The same node-based tools that artists use to craft materials and shaders can be used by software engineers to create things as advanced as fluid solvers almost from the ground up. At the core is a method for manipulating a graph. Sometimes building a graph via code is more complicated than composing it visually. And since the graph is compiled, one can still achieve run times as fast as, if not faster than, the more traditional text-based approach.

It was surprising to me how similar this programming style is to a machine learning tool like Theano. Both involve constructing a graph and both compile that representation into an executable program. The only difference is in work flow, or how one builds the graph.
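To make the "build a graph, then compile it" idea concrete, here is a minimal sketch in plain Python. It is not Theano's or Houdini's API; `Node`, `variable`, and `compile_graph` are hypothetical names made up for illustration, and "compiling" here only means fixing an evaluation order once and returning a callable that replays it.

```python
import operator


class Node:
    """One node in an expression graph: an input, a constant, or an operation."""

    def __init__(self, op=None, inputs=(), name=None, value=None):
        self.op = op          # callable for operation nodes, None otherwise
        self.inputs = inputs  # parent nodes feeding this node
        self.name = name      # set only for input placeholders
        self.value = value    # set only for constants

    def __add__(self, other):
        return Node(op=operator.add, inputs=(self, as_node(other)))

    def __mul__(self, other):
        return Node(op=operator.mul, inputs=(self, as_node(other)))


def as_node(x):
    """Wrap plain Python numbers as constant nodes."""
    return x if isinstance(x, Node) else Node(value=x)


def variable(name):
    """Create an input placeholder (hypothetical helper)."""
    return Node(name=name)


def compile_graph(inputs, output):
    """Walk the graph once to fix an evaluation order, then return a callable."""
    order, seen = [], set()

    def visit(node):
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent in node.inputs:
            visit(parent)
        order.append(node)  # parents always come before children

    visit(output)

    def run(*args):
        values = {id(v): a for v, a in zip(inputs, args)}
        for node in order:
            if node.op is not None:
                values[id(node)] = node.op(*(values[id(p)] for p in node.inputs))
            elif node.name is None:
                values[id(node)] = node.value  # constant
        return values[id(output)]

    return run


# Building the graph does no arithmetic; it only records structure.
x = variable("x")
y = variable("y")
z = x * y + 2

f = compile_graph([x, y], z)
print(f(3, 4))  # 14
```

A node editor would produce the same kind of structure by dragging wires between boxes instead of writing `x * y + 2`; everything after graph construction could stay the same.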

Two: Augmentation techniques and general image processing

Data augmentation has been shown to greatly improve the performance of image classification models. The basic idea is to apply transformations that do not change the contents of an image but do change how the model views it. For example, I can take a picture of a cat from multiple different angles. The cat is the same, but the model sees very different images of that cat. Techniques used today include color shifts, lens distortions, rotations, and other basic photo manipulation effects. I believe that these effects are just the beginning. Two techniques for human-controlled color manipulation in images were introduced this year: Palette-based Photo Recoloring and Data-driven Color Manifolds.
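As a rough illustration of the basic effects mentioned above, here is a minimal NumPy sketch that mirrors, rotates, and color-shifts an image. The function name `augment`, the shift range of ±20, and the random stand-in image are all assumptions chosen for the example, not settings from any particular paper or library.

```python
import numpy as np


def augment(image, rng):
    """Return a randomly transformed copy of an H x W x 3 uint8 image."""
    out = image.astype(np.float32)

    # Mirror left-to-right about half of the time.
    if rng.random() < 0.5:
        out = out[:, ::-1, :]

    # Rotate by a random multiple of 90 degrees, which needs no resampling.
    out = np.rot90(out, k=int(rng.integers(0, 4)), axes=(0, 1))

    # Shift each color channel by a small random offset (assumed range).
    out = out + rng.uniform(-20.0, 20.0, size=3)

    # Clamp back into the valid pixel range before converting to uint8.
    return np.clip(out, 0, 255).astype(np.uint8)


rng = np.random.default_rng(0)
# Random pixels standing in for a real photo of a cat.
cat = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)
batch = [augment(cat, rng) for _ in range(4)]
```

Each element of `batch` shows the same content under a different transformation. Note that the 90-degree rotations swap height and width for non-square images, so a real pipeline would either use square crops or resize afterwards.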

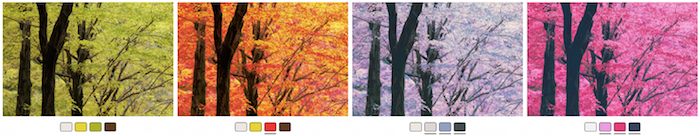

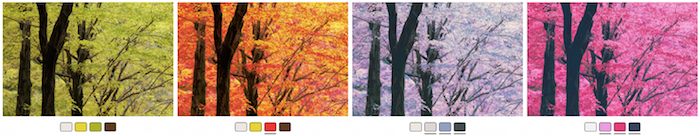

Data-driven Color Manifolds constructs a low-dimensional manifold that the user can move through to change the appearance of the image.

Palette-based Photo Recoloring constructs a palette of some fixed size so that users can modify colors in a more natural way. A user can change any of these swatches and the effect will propagate through the image in a realistic manner.

Three: Applications of large-scale image models

One presentation, Learning Visual Similarity for Product Design with Convolutional Neural Networks, uses techniques similar to what we use here at indico and shows an interesting use case for how these large neural networks can be applied to everyday items and tasks. The paper tries to solve a two-part problem. First, given a photo of a product for sale, find examples of the product being used in real houses (i.e., a photo “in context”) to help buyers get a sense of what does and doesn’t work stylistically with the product. Second is the reverse: given a picture of one or more products in context, link back to the product photos so the user can purchase the items.

They trained on data from Houzz labelled with Amazon Mechanical Turk. For the model they used a very large GoogLeNet-based network trained with a combination of a categorical loss and a Siamese loss penalty, so that it both classifies product type and measures similarity between products in context and clean product photos.
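For readers who have not seen a categorical loss combined with a Siamese penalty, here is a minimal sketch in plain Python. It is not the paper's exact formulation: the contrastive form of the Siamese term, the `margin` and `weight` parameters, and all function names are assumptions made for illustration.

```python
import math


def cross_entropy(logits, label):
    """Softmax cross-entropy for one example; `label` is the true class index."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum - logits[label]


def contrastive(emb_a, emb_b, same, margin=1.0):
    """Contrastive (Siamese) penalty on a pair of embeddings.

    Matching pairs are pulled together; non-matching pairs are pushed
    apart until they are at least `margin` away from each other.
    """
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(emb_a, emb_b)))
    if same:
        return dist ** 2
    return max(0.0, margin - dist) ** 2


def combined_loss(logits_a, label_a, logits_b, label_b,
                  emb_a, emb_b, same, weight=1.0):
    """Classification loss on both images plus a weighted similarity penalty."""
    classification = (cross_entropy(logits_a, label_a)
                      + cross_entropy(logits_b, label_b))
    return classification + weight * contrastive(emb_a, emb_b, same)
```

In this setup one image of the pair would be the in-context photo and the other the clean product photo, with `same` indicating whether they show the same product. The classification term keeps the embedding informative about product type, while the pairwise term shapes distances between embeddings.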

Conclusion

There are a huge number of interesting papers I did not touch upon that can be found here. One of my favorite things about SIGGRAPH is how open it is to other fields, and its culture is one that is always pushing forward.