Machine learning is an incredibly frothy space. It almost seems like every week there’s some new company using machine learning in some revolutionary new way. With all of the deep learning, data mining, and artificial intelligence buzzwords floating around, it can be extremely difficult to separate the signal from the noise.

This is not one of those articles. Machine learning is an incredibly tough subject, and if the goal were to inundate you with buzzwords and technical detail until you believed I was qualified to talk about machine learning, it would be simple. What’s much harder is doing the opposite. I’m going to take a piece of machine learning, in this case sentiment analysis, and explain what it’s good for, what it’s not, and what to watch out for without requiring you to read a single line of code.

What is sentiment analysis?

At the highest level it’s taking a piece of text and determining whether it is positive or negative. It’s something that humans do automatically, so it can be hard to appreciate how difficult it is to teach a computer to do the same. Let’s grab an example from the reviews of the classic Hutzler 571 banana slicer:

As shown in the picture, the slicer is curved from left to right. All of my bananas are bent the other way.

As a human, I can easily tell that this is a negative review. If the banana slicer is facing the wrong way, then it’s clearly broken. Amazon confirms this – it’s a two-star review.

But here’s the question:

How did I know this was a negative review?

There are no clear negative words in the review. If they had said something like, “this is an awful banana slicer,” then I could have guessed that since someone used the word “awful”, it’s a very negative review. When it comes to more subtle phrases like the one above, it can be very difficult to say exactly why I know it’s a negative review. Even though I’m certain that it is negative, it is very hard for me to explain why it’s negative.
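You don’t need code to follow the argument, but for the curious, here is a rough sketch of that “look for words like awful” approach. The word lists and the `keyword_sentiment` function are made up for illustration; they are not taken from any real product.

```python
import re

# Hypothetical, hand-picked word lists (assumptions for this sketch only).
NEGATIVE_WORDS = {"awful", "terrible", "broken", "bad"}
POSITIVE_WORDS = {"great", "love", "excellent", "good"}


def keyword_sentiment(text: str) -> str:
    """Guess sentiment by counting obviously positive and negative words."""
    words = re.findall(r"[a-z']+", text.lower())
    score = sum(w in POSITIVE_WORDS for w in words) - sum(
        w in NEGATIVE_WORDS for w in words
    )
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "no idea"  # no known words: the keyword approach has nothing to go on


banana_review = (
    "As shown in the picture, the slicer is curved from left to right. "
    "All of my bananas are bent the other way."
)

print(keyword_sentiment("this is an awful banana slicer"))  # negative
print(keyword_sentiment(banana_review))  # no idea
```

The obvious review is caught, while the banana review contains none of the listed words, so this approach cannot say anything about it.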

How do I teach a computer to do that?

The short answer is that it’s not easy. Realize that as humans, we approach the problem with all sorts of knowledge that a computer doesn’t have. To a computer, that review looks like this:

01000001 01110011 00100000 01110011 01101000 01101111 01110111 01101110 00100000 01101001 01101110 00100000 01110100 01101000 01100101 00100000 01110000 01101001 01100011 01110100 01110101 01110010 01100101 00101100 00100000 01110100 01101000 01100101 00100000 01110011 01101100 01101001 01100011 01100101 01110010 00100000 01101001 01110011 00100000 01100011 01110101 01110010 01110110 01100101 01100100 00100000 01100110 01110010 01101111 01101101 00100000 01101100 01100101 01100110 01110100 00100000 01110100 01101111 00100000 01110010 01101001 01100111 01101000 01110100 00101110 00100000 01000001 01101100 01101100 00100000 01101111 01100110 00100000 01101101 01111001 00100000 01100010 01100001 01101110 01100001 01101110 01100001 01110011 00100000 01100001 01110010 01100101 00100000 01100010 01100101 01101110 01110100 00100000 01110100 01101000 01100101 00100000 01101111 01110100 01101000 01100101 01110010 00100000 01110111 01100001 01111001 00101110

So, we have to go from that to “this is negative”. As a human we know that those 0s and 1s are letters. We know that when you combine those letters they form words, and when you put those words together they create sentences. Those sentences can express how we feel about a particular topic. The human mind goes through an extraordinarily complex process to figure this all out and make an extremely accurate prediction, and we don’t even realize it.
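If you want to check those 0s and 1s for yourself, the snippet below is one way to produce them. It assumes the review is stored as plain ASCII text, one byte per character; `to_bits` and `from_bits` are illustrative helper names, not part of any library.

```python
def to_bits(text: str) -> str:
    """Render each ASCII character as an 8-bit binary number."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))


def from_bits(bits: str) -> str:
    """Turn space-separated 8-bit groups back into text."""
    return bytes(int(group, 2) for group in bits.split()).decode("ascii")


review = (
    "As shown in the picture, the slicer is curved from left to right. "
    "All of my bananas are bent the other way."
)

bits = to_bits(review)
print(" ".join(bits.split()[:3]))  # 01000001 01110011 00100000 ("A", "s", space)
print(from_bits(bits) == review)   # True
```

Getting from bits back to letters is the easy part. Everything after that, from words to sentences to how someone feels, is what the computer has to learn.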

We’ve been trying to teach computers how to do this for over a decade now and we’re… making progress.

How do we know we’re making progress?

Excellent question! So, to figure out if we’re making progress we need to set a goal. When you hear that someone hit 94% accuracy, what’s that out of? What’s 100% accuracy?

For such a complicated problem, the answer is actually quite simple. Basically, this is what we do:

It takes 5 minutes just to scroll to the bottom.

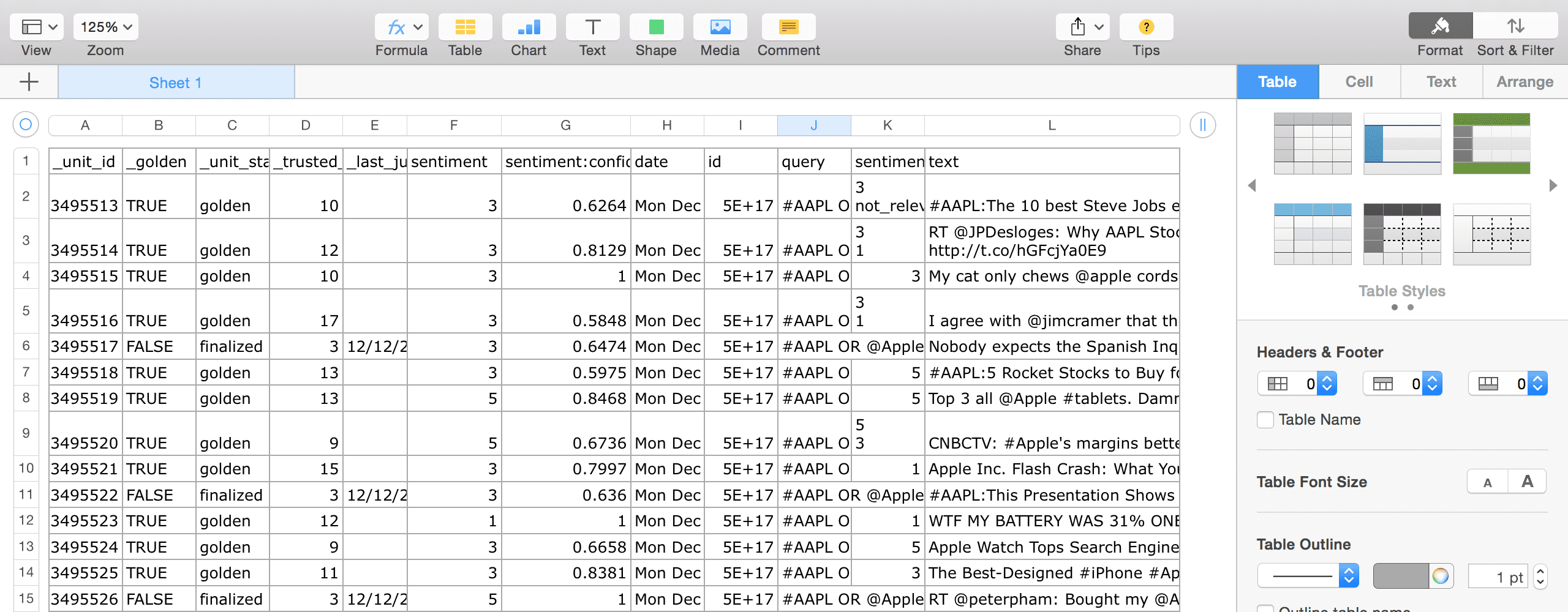

We go through a very long spreadsheet. In this case, it’s filled with tweets. We manually decide whether each one is positive or negative, and we write the answer down. I cannot emphasize enough how painful this is:

People earning six figures a year scroll through a spreadsheet reading tweets and deciding whether they are positive or negative. For weeks at a time. This is not a joke.

This is called “labeling data”, and it is probably the single largest limiting factor for machine learning today. We have people do it, we say that’s what 100% accuracy is, and then we have the computer do it. The more the computer agrees with the person, the more accurate we consider it to be. Seems straightforward, right?
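For readers who like to see it spelled out, this is roughly what “the more the computer agrees with the person” means as a calculation. The labels below are invented for the example, and `accuracy` is just an illustrative function name.

```python
def accuracy(human_labels, model_labels):
    """Fraction of examples where the model agrees with the human label."""
    if len(human_labels) != len(model_labels):
        raise ValueError("both lists must label the same examples")
    if not human_labels:
        raise ValueError("need at least one labeled example")
    matches = sum(h == m for h, m in zip(human_labels, model_labels))
    return matches / len(human_labels)


# Made-up labels for five tweets.
human = ["negative", "positive", "positive", "negative", "positive"]
model = ["negative", "positive", "negative", "negative", "positive"]

print(accuracy(human, model))  # 0.8, the model agrees on 4 of 5 tweets
```

Notice that the human labels are treated as the truth by definition. If the person labeling was wrong or inconsistent, the accuracy number inherits that.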

Why it’s not quite that simple

It turns out that not all data is created equal. Predicting sentiment is a very different problem depending on whether the text you’re making predictions on consists of Amazon reviews, tweets, or medical journals. It also depends how much data you’ve got.

It’s really easy to predict the sentiment of a single news article. It’s much, much harder to predict the sentiment of 100,000 tweets.

To make things that much more complicated, we also have to deal with the learning process. When you teach a computer what sentiment is, you end up showing it a huge number of examples. Depending on the data you’ve got, the number of examples you might use ranges from a few hundred to hundreds of millions.

So what’s the problem? Well, it’s not really fair to use those examples when you check your model’s accuracy. After all, the model has already seen the answers.

Like this, but with computers.
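The usual way around this is to set some labeled examples aside before training and only use them for checking. A minimal sketch of that idea follows; the 20% hold-out, the fixed seed, and the function name `train_test_split` are choices made for this example, not a description of how any particular system does it.

```python
import random


def train_test_split(examples, test_fraction=0.2, seed=0):
    """Shuffle the labeled examples and hold a fraction back for testing."""
    shuffled = list(examples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Ten made-up labeled tweets.
labeled = [
    (f"tweet {i}", "positive" if i % 2 == 0 else "negative") for i in range(10)
]

train, test = train_test_split(labeled)
print(len(train), len(test))  # 8 2
```

The model learns only from `train`. Accuracy is then measured on `test`, which it has never seen.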

To make things even more complicated, there’s the whole question of domain. The thing is that there are SO MANY different kinds of text. Each one has a different “sentiment” associated with it. “Continuous cleavage” means something very different in a geologic context than it does in everyday speech. No model is going to be the best at every kind of text. If you ever end up maintaining a sentiment analysis API, here’s a quick sampling of the kinds of things people are going to ask you to determine the “sentiment” of:

- Scientific journal articles

- UN proceedings

- Facebook posts

- Cat pictures

- Pitchfork reviews

- The number 7 (less positive than 3)

- Obama

- War and Peace

Again, this is not a joke. People ask us to classify things like this every day. When you’re handling hundreds of requests each second (this is the scale that indico deals with; other companies deal with very different amounts of data) you really see it all.

A model that is really good at analyzing Facebook is probably not that good at analyzing Nature articles

Personally I like to consider myself pretty up-to-date with the world at large. I’m an early adopter of a lot of technology, and am always looking for a new artistic TV show… but I just learned who the Kardashians were last week. In machine learning terms you might say I “overfit”. I “failed to generalize” to examples outside of my “training set”. In human terms you might say that I’ve been living under a rock. I may have gotten really good at recognizing everything under my rock, but there is a very big world out there.

You’re telling me this isn’t a phone?

This “overfitting” problem is very well known and has been studied in incredible detail. The question of “How do I get this computer to generalize?” is one that has existed for as long as machine learning itself, and to be perfectly honest it’s still one of the biggest problems in machine learning.

Let’s get real

What are the effects of this in the real world? Well, it means that it’s almost impossible to put together an accurate measure of how “good” we are at sentiment analysis. Most commercial systems for sentiment analysis explicitly prohibit the use of their products to assess accuracy. The reason for this is that any measure of accuracy is subject to bias. Any company can put together a series of tests that makes their sentiment analysis look more accurate than everyone else’s. It just happens to be extremely dishonest, and in many cases illegal.

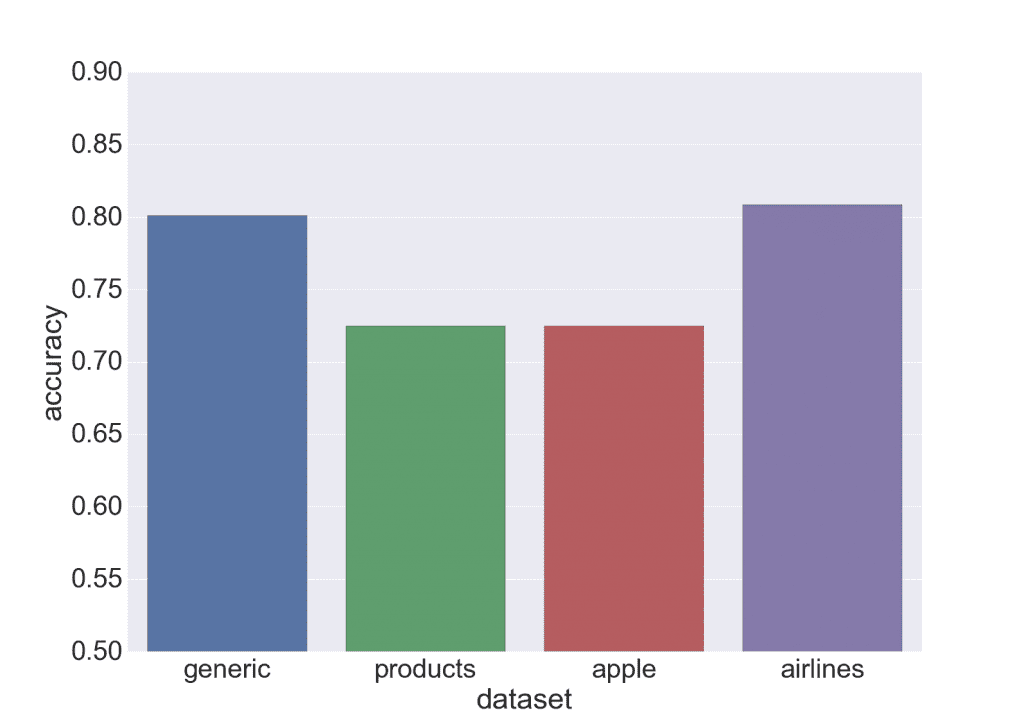

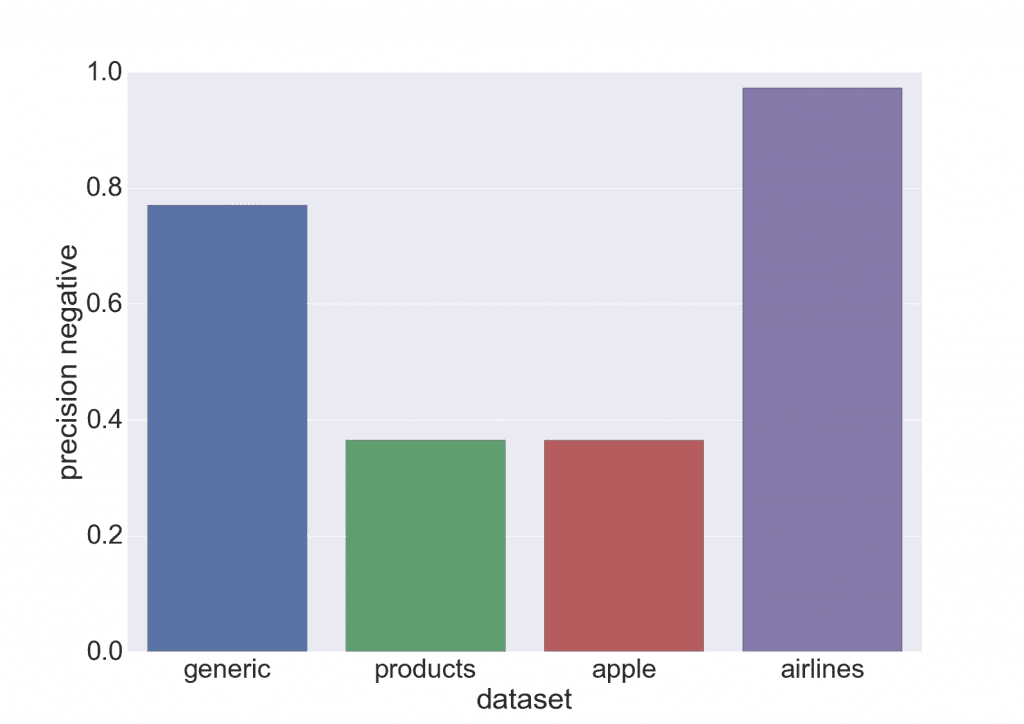

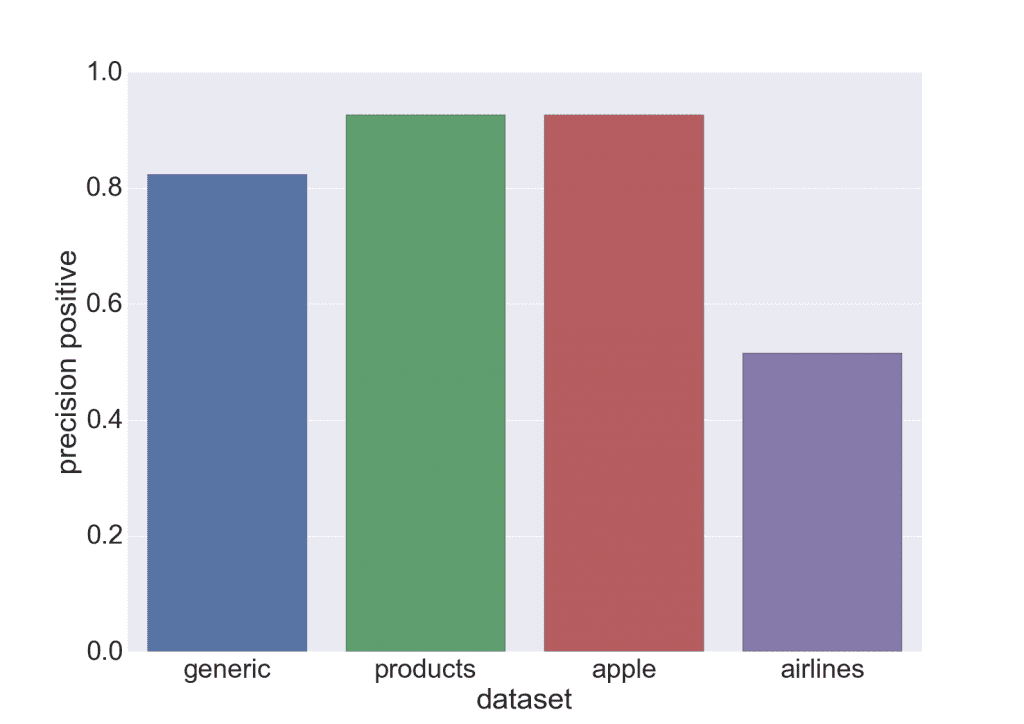

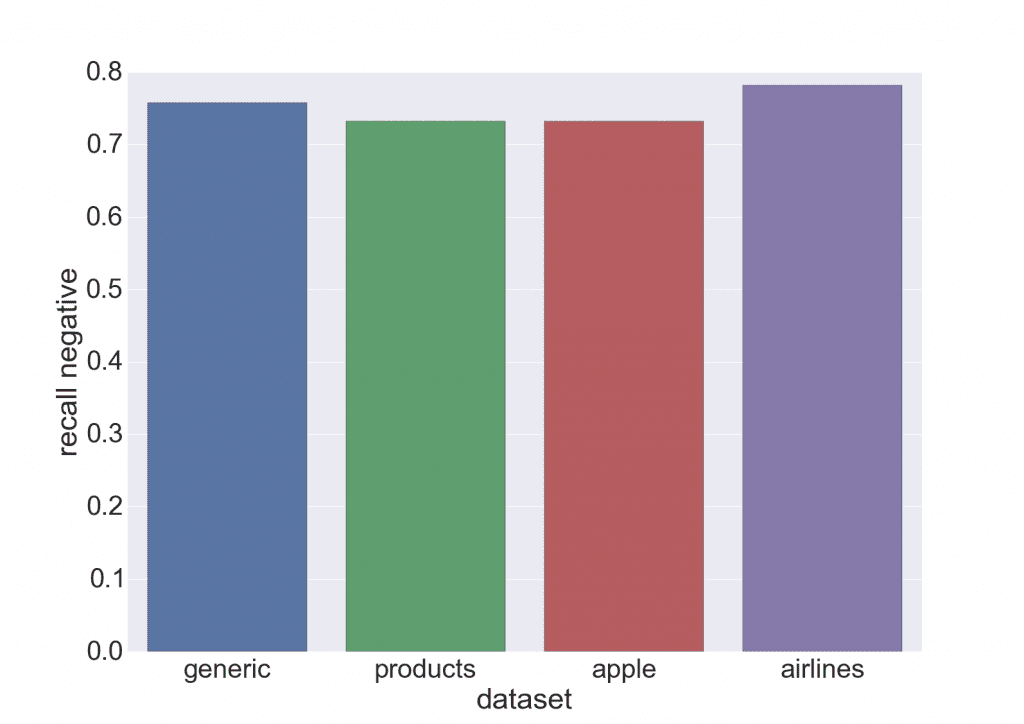

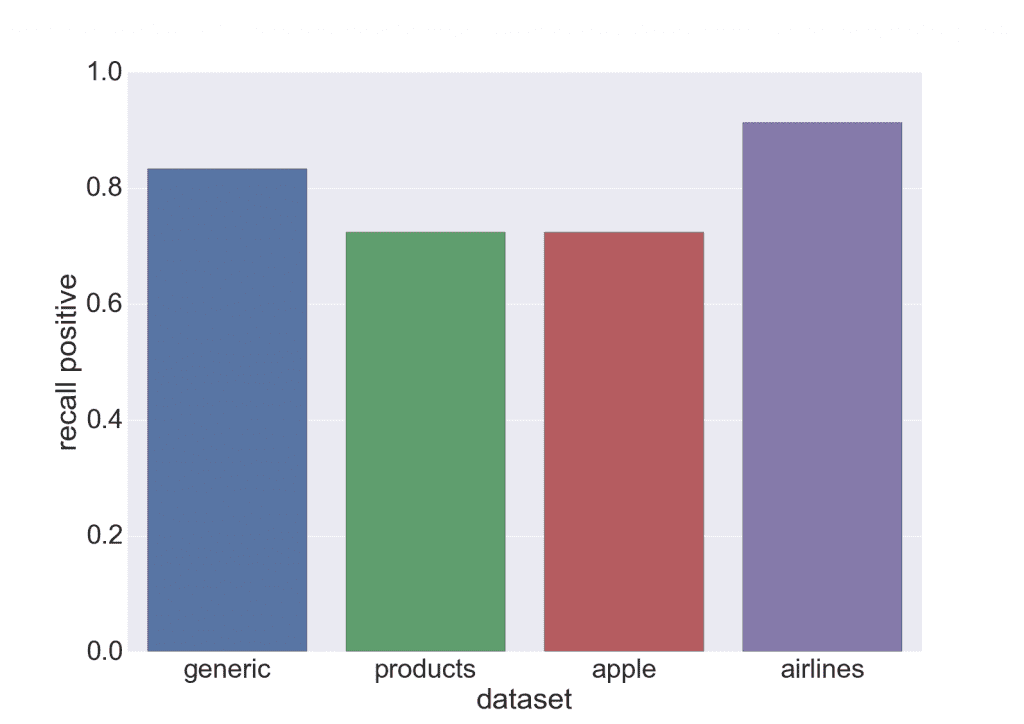

To show you how wide the spread can be among very similar problems, we’ve gone ahead and posted some of our accuracy numbers. We’ve removed the neutral class from these examples because our product only predicts positive vs. negative. This may make our numbers seem artificially inflated compared to others benchmarking on the data. This data should not be used to compare indico to any other services, as such a comparison would be apples to oranges.

If some of those metrics like “precision” and “recall” aren’t familiar to you, don’t worry. The point is to show you how a single model can have such a wide set of responses.
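If you would like a concrete feel for those two metrics anyway, here is a small sketch using their standard definitions for the “positive” class. Precision asks how many of the model’s “positive” calls were right, and recall asks how many of the truly positive examples it found. The labels are invented, and returning 0.0 when there is nothing to divide by is a convention chosen for this example.

```python
def precision_recall(human_labels, model_labels, target="positive"):
    """Precision and recall of the model for one class, judged by human labels."""
    pairs = list(zip(human_labels, model_labels))
    true_pos = sum(h == target and m == target for h, m in pairs)
    false_pos = sum(h != target and m == target for h, m in pairs)
    false_neg = sum(h == target and m != target for h, m in pairs)

    predicted = true_pos + false_pos  # everything the model called positive
    actual = true_pos + false_neg     # everything the human called positive
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


# Made-up labels for six examples.
human = ["positive", "positive", "positive", "positive", "negative", "negative"]
model = ["positive", "positive", "negative", "negative", "positive", "negative"]

precision, recall = precision_recall(human, model)
print(round(precision, 2), recall)  # 0.67 0.5
```

Here the model is right about two of its three “positive” calls, but it finds only two of the four positives, which is why the two numbers differ.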

Conclusion

Be extremely wary of benchmarks, especially if a company has gone out and chosen its own dataset. At the end of the day, you want to be able to try it yourself. If you have a specific dataset, you need to make sure the benchmarks are run on the same kind of data that you will be using the model on. If you don’t have a dataset at the ready, you should rely on your own qualitative assessment of the model. Chances are, it’ll give you a better sense of the API than any benchmark will.