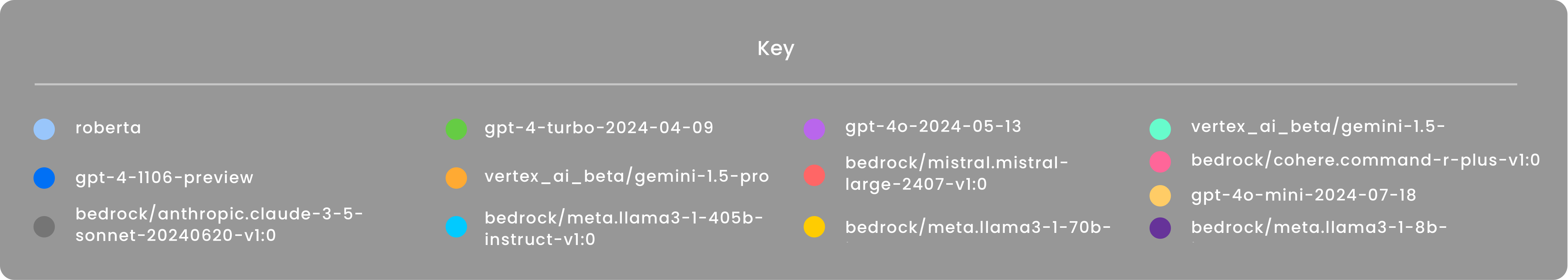

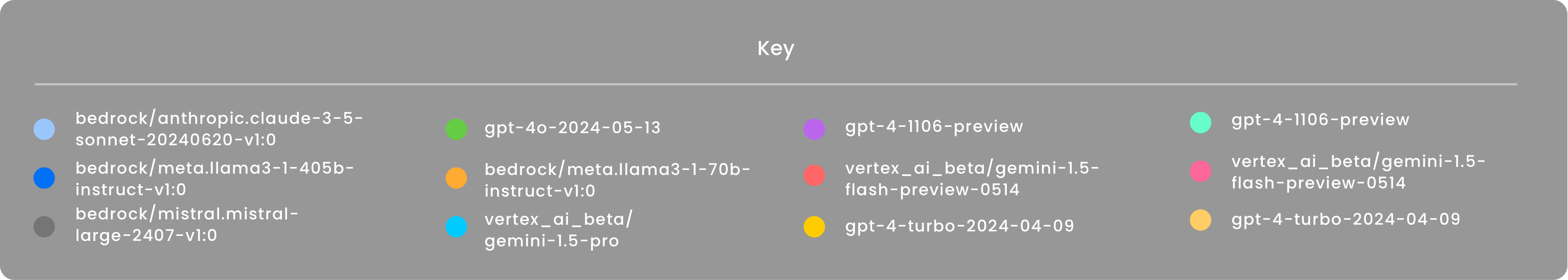

Welcome to the ultimate resource for comparing large language models. Here, we meticulously analyze and present the accuracy, speed, and cost-effectiveness of leading models across critical tasks such as information extraction, clause classification, and summarization.

Since its inception, Indico Data has consistently emphasized practical AI applications and real customer outcomes in a field often clouded by hype. Indico was the first in the industry to deploy a large language model-based application inside the enterprise and the first to integrate explainability and auditability directly into its products, setting a standard for transparency and trust.

Most LLM benchmarking focuses on chatbot-related tasks. Indico recognized the need to measure how large language models perform on more deterministic tasks such as extraction and classification, and to understand how performance and cost vary with context length and task complexity.
